*Editor’s note: This review was coordinated and submitted by Rokslide Member @Tahoe1305. It’s Rokslide’s mission to offer the most unbiased reviews possible, and when we read this one, it definitely fit the mission. The author doesn’t claim that this is the most scientific or objective review, but we thought there was much value in the results. We hope you enjoy the review:*

Part I: Purpose and Evaluation Methodology

Purpose:

Many of us wonder what upgrading optics actually “buys” us. We’ve all heard that just looking through optics in your local sports store is ineffective. The intent of this crowd-sourced optics comparison was twofold. First, it allowed folks to spend time behind a variety of optics to see for themselves if an upgrade is worth it. Second, it allowed regular folks to compare optics as objectively as possible.

My personal objectives were to see if upgrading to Swaro’ 12×42 NLs was worth roughly $1,000 more than my tried-and-true Swaro’ 12×50 ELs. Additionally, I was curious about the new set of mini spotters from companies like Vortex, Maven, and Swarovski that hit the market in the last 2-3 years. I wanted to see just what place they had in a hunt vs the larger spotters.

Methodology

About a month ago, I put out a solicitation on the Rokslide forum for folks in the greater Denver area of Colorado to get together with their own optics to compare as a group. We had about 15 folks volunteer to participate. Additionally, we had a number of folks offer to send some of their optics in for comparison. Robby Denning was able to push us a Swaro’ ATC he’d been using, but we already had most of the other optics (barring just a few) from local folks. I didn’t limit the optics to be compared, although I preferred to keep the number small and was less concerned about the 80mm+ spotters and more interested in the smaller stuff.

On the third link-up attempt, the crew was able to gather on Saturday, February 17th, at 2:30 p.m. There were a number of things I “failed” at and regret (more on those later), but the first one was not doing an accurate head count and getting a photo with all of us there. I recall there being approximately ten folks, though, and an estimated 20-30 pieces of glass.

As you can see in the attached photos and onX screenshot, we sat up on a hill at the edge of a parking lot, looking down over a residential valley. We had three primary “targets”.

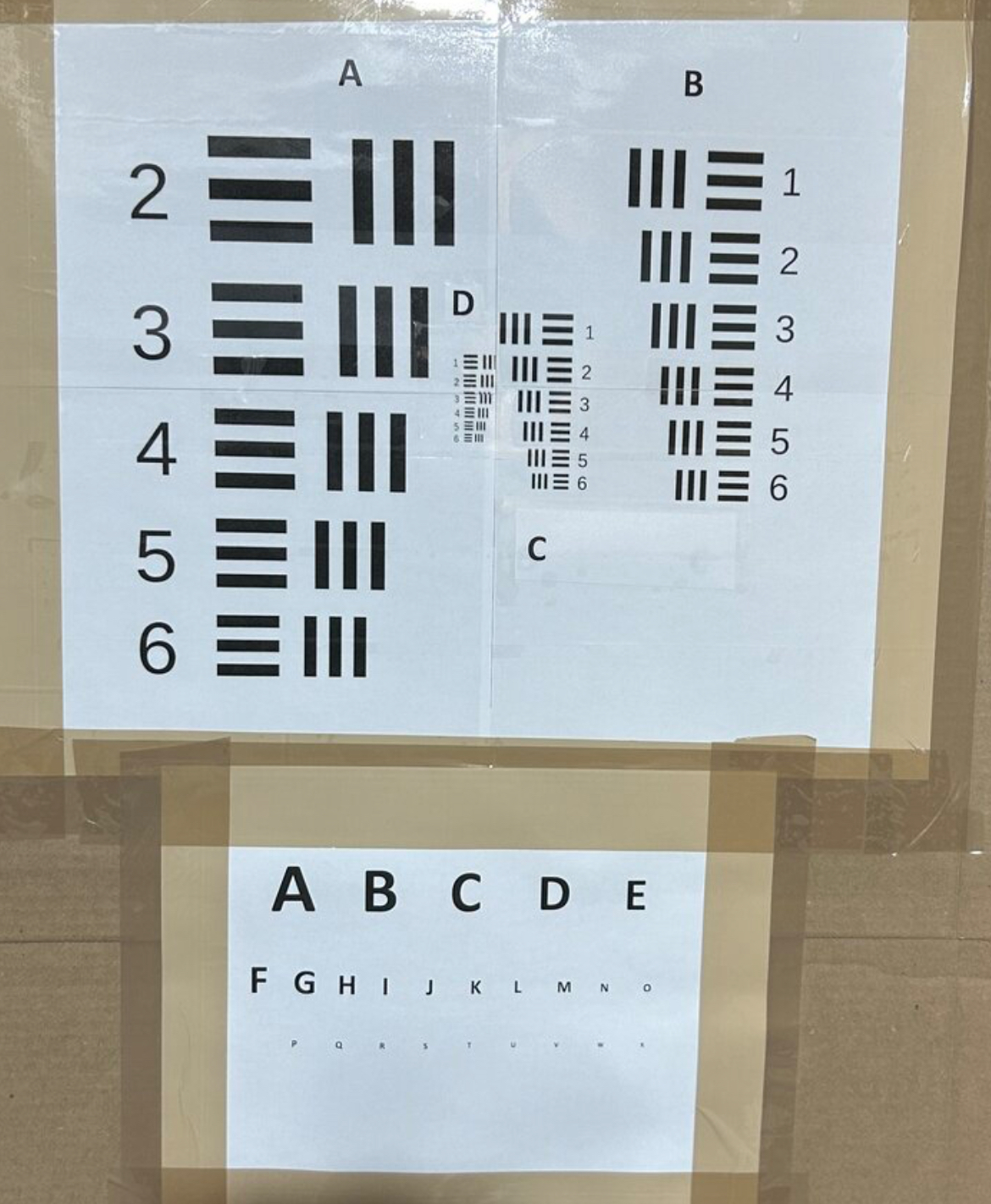

- The first resolution chart (see photo above) was placed at 400 yards. It had a string of medium-sized colored Christmas lights around the tree from which it was hanging. We used the colored lights to assist with judging the optics’ color hues.

- The second similar resolution chart was placed in the shade of a tree at 600 yards.

- The last target was a full-size 10-point white-tail deer 3D archery target that was placed on a hill in the shade at 1130 yards.

The Process

The task was to use the provided charts, deer, and fence posts to compare the optics as objectively as possible. The deer was used to confirm if tines could be counted at distance or if only the body of the deer could be seen (if at all). The fence posts were from a baseball field at approximately 500 yards and were perfect for counting and comparing the field of view (FOV).

For the resolution charts (both near and far), we attempted to find the smallest resolution element we could resolve with the center of the optic. This was done by working down the chart until the white and black could no longer be told apart; the number of the smallest element still resolved was recorded. We then moved the optic so the chart sat in the outer 10% of the FOV and reassessed for resolution loss or edge-to-edge clarity. I would argue these tests are about as objective as we can get in a “semi-controlled” environment. The other tests involved color and chromatic aberration (CA), which were less objective, and we found ourselves comparing optics against each other rather than against a chart. None of us saw any great amounts of CA in the areas we were viewing, so I hid that element from the comparison worksheet.

Most of us stayed until roughly official sunset, approximately 5:30 p.m., looking at the targets. I would estimate we had a solid two hours in full sun. Most of us did notice that at approximately 4 p.m., the sun passed behind the west range and cast shadows on all the targets.

Below are a handful of items I admit should have been done better:

- Photo and headcount of everyone

- Instructions on how to use the charts (provided, but not everyone was there concurrently)

- Not having a chart printout on hand (digital only)

- Not having enough daylight for non-low light comparison with all optics

- Not having the worksheets filled out similarly (zoom levels, time, etc.)

- Lighting on the deer changed halfway through our test period

- Not taking a photo through every spotter

- Not covering ALL options (Again, it was crowd-sourced…I get it if your optic wasn’t compared)

Part II: Findings

As mentioned previously, not everyone could provide consistent objective data for the findings. I attribute this to a few things:

- Not everyone came with the goal of comparing objectively, so some didn’t fill out a worksheet.

- Some showed up at different times and likely didn’t even know a worksheet was there to fill out.

- Others may have missed the directions to fill it out and left off some critical pieces of info.

In all, we had three fairly comprehensive worksheets to compile. I have done similar comparisons at least three other times; therefore, my primary focus was simply collecting data. This can be seen with the 22 variations on the worksheet. We did get two other folks (no names on sheets) who looked at a few optics that I missed, as well as a few of the same optics. I was happy to see consistent results on the optics we both covered, and I appreciated their data on the optics I didn’t get to.

I’m not saying this data is perfect. I tried to apply the same visibility standard to all. But we all have biases. I didn’t adjust any of the data, but in Part III of this analysis, I will give my opinion on why or how a few of the data points may be a tad inconsistent with what some may have thought. Additionally, as much as I hoped this would be a perfect experimental environment, it was not. Uncontrollable items such as light wind, mirage, and individual visual acuity affected the results. That said, I would treat any scores within +/-1 level of each other as equal or very close. Consider this the error margin.
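The +/-1 margin can be read as a simple rule for comparing two scores. Here is a minimal sketch in Python, assuming lower scores are better (as with the resolution levels on the worksheet). The function name and the example scores are hypothetical and are not values from the worksheet:

```python
def compare_scores(score_a: int, score_b: int, margin: int = 1) -> str:
    """Compare two resolution scores where a LOWER number is better.

    Scores within `margin` levels of each other are treated as a tie,
    mirroring the +/-1 error margin described in the text.
    """
    if abs(score_a - score_b) <= margin:
        return "tie"
    return "a" if score_a < score_b else "b"


# Hypothetical scores, for illustration only.
print(compare_scores(4, 5))  # one level apart, inside the margin
print(compare_scores(3, 6))  # three levels apart, optic "a" resolved more
```

For FOV, where a larger fence-post count is better, the comparison would flip.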

For the spotters, I personally felt it important to test each one at its minimum and maximum available zoom rather than testing them all at the same zoom. The reason for this is simple. If I am considering a different optic, I am not limited to an intermediate zoom; I can use the max, and it “may” benefit me. I see value in both metrics, though, and in some cases, other folks compared them all at the same zoom, which is awesome.

To make the data a bit more digestible, I converted the four chart elements (A, B, C, D / 1-6) into simple number values. I assigned one to the smallest element and counted up as the size increased, to 23. This way, folks can see “levels” of loss and easily see which optic performed best. The smaller number is better except for FOV, which is the number of counted fence posts (larger is better). “Not visible” meant we couldn’t distinguish the resolution elements for that test or distance.
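The conversion described above can be sketched roughly as follows. This is an illustration only: the element labels and their order below are placeholders, and the real smallest-to-largest ordering would have to come from the chart itself.

```python
from typing import Dict, List, Optional


def build_levels(labels_smallest_first: List[str]) -> Dict[str, int]:
    """Map each chart element label to a level, starting at 1 for the smallest."""
    return {label: level
            for level, label in enumerate(labels_smallest_first, start=1)}


def to_level(reading: Optional[str], levels: Dict[str, int]) -> Optional[int]:
    """Convert a recorded element label to its level.

    A reading of None stands in for "Not visible" on the worksheet.
    """
    if reading is None:
        return None
    return levels[reading]


# Placeholder ordering (an assumption, not the actual chart layout).
levels = build_levels(["D6", "D5", "D4", "D3"])
print(to_level("D5", levels))
print(to_level(None, levels))
```

Keeping “Not visible” as a separate value, rather than a large number, avoids implying a measured level where none was recorded.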

I think the compiled worksheet speaks for itself, but I do have a few thoughts that are worth highlighting…spoiler alert: there aren’t many surprises here:

- None of the binos (with the exception of one person’s notes about the 18x Mavens) could break out the deer’s antlers at 1130 yards. A spotter was needed, and all the spotters could break out the tines (see note at end on one potential reason for this).

- Zoom is king. I’ve learned this before, but in nearly every situation, the optic with the higher zoom within its category (binos or spotters) was able to resolve more.

- Every optic lost resolution at the edges; the “alpha” optics, in general, lost less.

- FOV tracks magnification in nearly every situation: the higher the magnification, the narrower the view. There were a few outliers, but nothing was drastically wider than others.

- For my comparison of the 12x NLs vs the 12x ELs, I thought they were nearly identical (and the numbers agree). The NL does have a tad more “pop” in color, is easier to hand hold, and is much lighter. I looked through them again today in the same spot and actually got annoyed by some very minor blackouts with the NLs. I “think” it is the interpupillary distance being a bit wider on the NLs. To avoid blackout, I had to either a) bring out the eye cups, which affected the wide FOV, or b) squeeze my nose a bit, which was uncomfortable. I will mess with them some more because I want to want those NLs…they are sweet.

- We didn’t take weights of the optics (but we can if anyone is curious). The reason is that most of what we had was configured slightly differently (objective covers, socks, etc.), and it wasn’t worth stripping everything down for an apples-to-apples comparison. I have found that, for the most part, the online specs on the higher-end stuff are close enough for decision making.

The photos provided are, unfortunately, only of the remaining spotters I have on hand. All are taken with a super fancy Amazon adapter (it actually works awesome and is universal) with an iPhone 14 zoomed at 1.5x from the same tripod. I did use “live” to pick the cleanest frame for all because it was a tad windy. Nothing else was modified. And yes, it looks way better to the naked eye (as seen in the compiled worksheet data).

Part III: General Thoughts

You get what you pay for…But what do you really get?

We all want the best optic for the least amount of coin. But frankly, that doesn’t exist. It doesn’t mean there isn’t a good balance of “value” out there (I am happy to give my opinions), but in general, the more you pay, the better the viewing experience will be. But what do you get for more cash? I think you get two things based on this test and others I have done.

- First, you get edge-to-edge clarity. Most “mid-level” optics will resolve close to the same as alpha (within a level or two) in the center. The alpha glass separates itself on the edges, though.

- Second, the coatings and glass on the higher-end optics perform better in low light. It is obvious pretty quickly as the sun sets and the shadows roll in. How much? It depends, but I would estimate the higher-end glass holds equal resolution for an average of 10-15 minutes longer.

Mini spotters have a spot in my bag.

I was really excited to check out these mini-spotters. The main reason is I am a weight weenie. Not sure why…I think I am just analytical and obsessed with saving weight. The mini spotters clearly do this and save space, but at what cost?

As shown, the three minis really hung pretty well with the 65mm spotters. Maybe I lost one or two resolution elements (mainly due to zoom limitations), but I am confident I can judge a trophy out to about a mile with them. No, you are likely not counting rings, but for most of us, that is a small percentage of our hunting. As the charts show, the Swaro ATC is special. Robby and a few others, in comparison, have already mentioned that, but I think I can speak for the group when I say it was likely the most impressive piece we had in the entire test.

Swarovski NLs are amazing.

This all started for me as a search to upgrade to the latest and greatest bino. But like I mentioned, I want to know what I am getting for my hard-earned money. I will say optically, I am not getting much more than the ELs. What I am getting is a close-to-equal viewing experience in a lighter, smaller package. If I can make them work for me (the interpupillary distance thing), then I am all in.

Quality Control and Sample Size Matter.

I, like many, will find it odd that some of the cheaper optics performed as well as the more expensive ones. I have seen this personally a lot and think it can be attributed to a few things. Companies like Vortex can produce an optic with great resolution, but what typically suffers are colors and edge clarity. Additionally, fit and finish are typically not as good as the more expensive brands. Lastly, and most importantly, the individual sample can be extremely important. All companies put out good and bad product samples, but some companies I believe struggle more with quality control; sometimes you get a winner and other times not. It can be impossible to know what you have without comparison.

Closing Thoughts

Feel free to ask me or anyone in the group some questions at the link below. We prefer not to field any hate on “why you didn’t have xxx optic.” Trust me, we wanted to….we just didn’t. Additionally, you can disagree with the data provided, but you also didn’t make the drive to see for yourself, so be nice!

I would consider this review just another data point in a bigger dataset to help inform the end user. The decision on upgrading is purely up to you. After all, what we value and how our eyes see the world are all different.

Lastly, I highly encourage other members on Rokslide to put together similar comparisons, maybe less for the objective data and more to give folks opportunities to experience a wide variety of optics in a relevant field setting. Plus, you get to know some of the folks you converse with on the forum! Thanks for reading.

Comment or ask Phillip questions here.